I needed something very basic to monitor various personal servers that I look after. They’re scattered over multiple networks and behind many different firewalls, so monitoring systems designed for local networks (Nagios, Munin, etc.) are pretty much out of the question. Actually, this would be possible with something like a reverse SSH tunnel, but with machines scattered all around, a tunnel just adds another point of failure and provides no gains compared to a simple heartbeat sent as a cron job from the monitored machine to a central supervisor.

Email notifications

The first thing that came to mind was email. It can rely on a cloud email provider (Gmail in my case), so it’s scalable, simple to understand and simple to manage. It’s time-tested, robust and works from behind almost any firewall without problems.

The approach I found most versatile after some trial and error was a Python script in a cron job. Python was just about the only language that came bundled with the complete SMTP/TLS functionality required to connect to Gmail, on virtually any platform (Windows with Cygwin, any Linux distro, Solaris).

Here’s the gist of the mail sending code.

I created a new account on my Google Apps domain, hardcoded the password into the script and installed it as a cron job to send reports once a day or once a week.
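
For readers who want a starting point, here is a minimal sketch of what such a heartbeat mailer might look like. It is not the script from the post: it assumes Python 3, Gmail's SMTP submission endpoint (`smtp.gmail.com`, port 587 with STARTTLS), and placeholder values for the account, recipient and password. The report content (a one-line disk usage summary) is likewise just an example.

```python
import shutil
import smtplib
import socket
from email.message import EmailMessage

# Placeholder settings (assumptions, not the author's real values).
SMTP_HOST = "smtp.gmail.com"
SMTP_PORT = 587  # submission port, upgraded with STARTTLS
SENDER = "monitor@example.com"
RECIPIENT = "admin@example.com"
PASSWORD = "change-me"  # hardcoded, as described in the post


def disk_report(path="/"):
    """Return a one-line disk usage summary for the given mount point."""
    usage = shutil.disk_usage(path)
    percent = 100 * usage.used // usage.total
    return f"{path}: {percent}% used, {usage.free // 2**30} GiB free"


def build_report(hostname, body):
    """Wrap the report text in an email message."""
    msg = EmailMessage()
    msg["From"] = SENDER
    msg["To"] = RECIPIENT
    msg["Subject"] = f"[heartbeat] {hostname}"
    msg.set_content(body)
    return msg


def send_report(msg):
    """Send the message, switching to TLS before logging in."""
    with smtplib.SMTP(SMTP_HOST, SMTP_PORT, timeout=30) as smtp:
        smtp.starttls()
        smtp.login(SENDER, PASSWORD)
        smtp.send_message(msg)


# Typical entry point when run from cron:
#   send_report(build_report(socket.gethostname(), disk_report("/")))
```

A crontab line such as `0 7 * * * python3 /path/to/heartbeat.py` (path hypothetical) would then send one report per day.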

I’ve been using email already for quite some time to notify me of different finished backup jobs, but only recently have I tried this approach to monitor servers; I’ve expanded it to over 10 different machines, and let me tell you that checking ten messages a day quickly becomes a pain, since their information density is exactly zero in most cases. I needed something better.

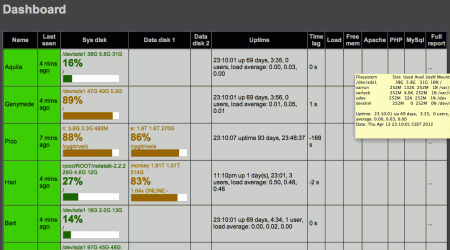

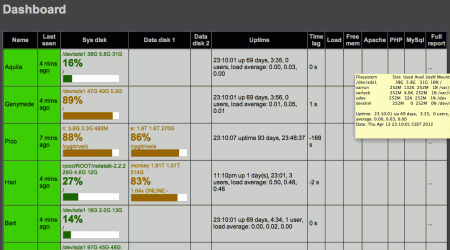

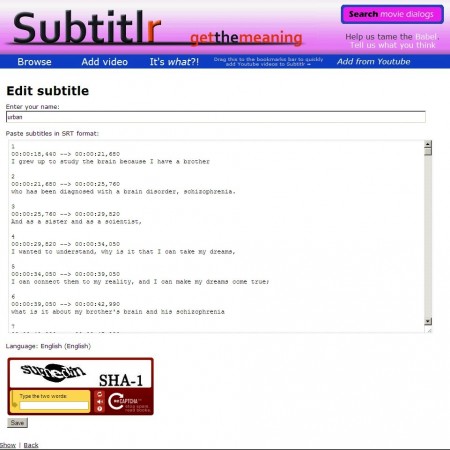

A dashboard

So I decided to build a dashboard to see the status of all the servers. However,

- I didn’t want to set it up on any of my existing servers, because that would become a single point of failure.

- I wanted cloud, but not IaaS, where the instance is so fragile that it itself needs monitoring.

- I wanted a free and scalable PaaS for exactly that reason: somebody manages it so I don’t have to.

With that in mind I chose Google App Engine; I had been reluctant to write for and deploy to App Engine before because of the lock-in, but for this quick and dirty job it seemed perfect.

I was also reluctant to share this utter hack job, because it “follows the scratch-an-itch model” and “solve[s] the problem that the hacker, himself, is having without necessarily handling related parts of the problem which would make the program more useful to others”, as someone succinctly put it.

However, just in case someone might have a similar itch, here it is:

It’s quite a quick fix all right: no SSL, no auth, no history purging. All problems were solved as quickly as possible, through security by obscurity and through the GAE dashboard for managing database entries; the remaining ones will be addressed once they actually surface. The cost of such a minimal solution getting compromised is smaller than the investment needed to fix all the issues or change the URL. Use at your own risk or modify to suit your needs.
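
To make the client side concrete, a cron-driven reporter for a dashboard like this could be as small as the sketch below. Everything specific in it is an assumption for illustration: the endpoint URL (with a hard-to-guess path standing in for "security by obscurity"), the JSON payload and its field names are hypothetical and not taken from the actual project.

```python
import json
import time
import urllib.request

# Hypothetical endpoint; the real URL and payload format are not given in the post.
DASHBOARD_URL = "http://example.appspot.com/report/some-hard-to-guess-token"


def build_heartbeat(hostname, disk_percent, details):
    """Build (but do not send) a POST request carrying one status report."""
    payload = {
        "host": hostname,
        "timestamp": int(time.time()),
        "disk_percent": disk_percent,
        "details": details,  # free-form text compiled on the client
    }
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        DASHBOARD_URL,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_heartbeat(request):
    """Send the report and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

Keeping request construction separate from sending makes it easy to check the payload on a machine that cannot reach the dashboard.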

Here’s a screenshot; it uses Google static image charts to visualize disk usage and shows basically any chunk of text you compile on the clients inside a tooltip. More categories to come in the future.
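
As an illustration of how a static image chart for disk usage can be produced, the sketch below builds a URL in the style of the Google Image Charts API (`cht` for chart type, `chs` for size, `chd` for data, `chl` for labels). The pie chart type, the dimensions and the helper name `disk_chart_url` are assumptions; the dashboard may well use a different chart. Google has since deprecated that API, so treat this as a picture of the URL format rather than something to deploy.

```python
from urllib.parse import urlencode

# Base URL of the Google Image Charts API.
CHART_BASE = "https://chart.googleapis.com/chart"


def disk_chart_url(used_percent, width=200, height=100):
    """Return an image URL for a two-slice pie chart: used vs. free space."""
    used = max(0, min(100, int(used_percent)))  # clamp to the 0-100 range
    params = {
        "cht": "p",                        # chart type: pie
        "chs": f"{width}x{height}",        # image size in pixels
        "chd": f"t:{used},{100 - used}",   # data series in text encoding
        "chl": "used|free",                # slice labels
    }
    return CHART_BASE + "?" + urlencode(params)
```

The resulting URL can be dropped straight into an `<img src="...">` tag, which is what makes static charts attractive for a dashboard with no client-side scripting.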
