Subtitlr retires

The agony has gone on long enough: from an idea in 2006, to a proof of concept in mid-2007, a business plan and hopes of a start-up (under the name of Tucana, d.o.o.) in 2008, directly to the dustbin of history.

I’ve just pulled the plug and shut it down.

Its ideas were good: a Wikipedia-inspired, revision-based subtitling and subtitle translation service, which would help spread knowledge in the form of Flash-based video clips. It’s been obsoleted by other projects with more traction, such as DotSub and TED translations (incidentally, most of the clips I was inspired by and wanted to share with people whose first language was not English came from TED itself). Now that YouTube’s speech recognition and Google’s machine translation have gotten much better, there’s less and less need for painstaking manual transcription.

If I had to choose one thing to blame for its lack of success, underestimating the difficulty of transcribing video would be it. It literally takes hours to accurately transcribe a single clip that is no longer than a couple of minutes.

I tried rebranding and repurposing it into a funny clip subtitler, and at least got some fun and local enthusiasm out of that. However, it’s all part of one big package which now needs closure.

Some ideas I had were never implemented, although I thought they had great potential. I wanted to bring together large databases of existing movie and TV show subtitles with the publicly available video content in Flash Video. Since at the time almost all video on the web was FLV, there was no technological barrier. And there are still a lot of popular TV shows, movies, etc., buried deep in the video CDNs (YouTube, Megavideo, Megaupload), while large databases of “pointers” are maintained and curated by different communities (Surfthechannel.com, Icefilms.info). Having the video and the subtitle available instantly, without the cost of hosting large files, was a textbook mash-up idea.

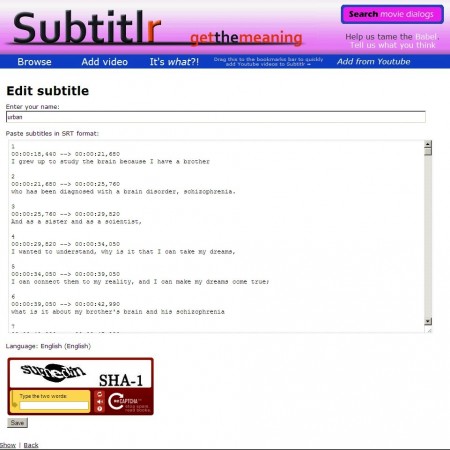

I’m posting some screenshots here, for the future me, so I can remember what I was spending countless hours of my time on. Yes, the design’s ugly, but bear in mind it was all the work of one man, eager to add functionality, and pressed into kickstarting the content generation by also transcribing and translating a bunch of videos.

Thanks to all who shared the enthusiasm and helped in any way.

Urban 19:54 on 13 Sep. 2014

comment test