I’ve written about my small and green home server before. I love its low power consumption, integrated UPS/keyboard/screen and the small size.

But it was time for an upgrade — to a real server.

Size comparison: HP Microserver vs. Asus EEE

The reasons for an upgrade

What I missed most was CPU power. The 600 MHz Celeron got pretty bogged down during writes because of NTFS compression, and as the number of concurrent writes grew, write performance slowed to a crawl.

Then there was a shortage of RAM. 1 GB is enough for a single OS, but I’m kind of used to virtualizing stuff. I wanted to run some VMs.

Also, I’ve been reading a lot about data integrity. This was supposed to be my all-in-one central data repository, but was based on cheap hardware with almost no data protection at all:

- My single drive could easily fail; it would be nice to have two in a mirror (also, not USB).

- Bits can randomly and silently flip without leaving any detectable signs (the infamous bit rot).

- Memory corruption does happen (failing memory modules). What’s more, bits in RAM flip significantly more often than bits on hard drives (memory bit rot, or soft errors).

So what I wanted to combat these problems was:

- a server that’s still as small, as silent and as green as possible;

- one with a more decent CPU and plenty of RAM;

- one that supports ECC (error-correcting code) RAM;

- and one that can accommodate an OS with a native ZFS file system.

Why worry about data integrity all of a sudden?

Well, they say the problem’s always been there: the bit error rate of disk drives has been almost constant since the dawn of time. Disk capacity, on the other hand, doubles every 12-18 months.

This loosely translates to: there’s an unnoticed disk error every 10-20 TB. Ten years ago one was unlikely to reach that number, but today you only need to copy your 3 TB Mybook three times and you’re likely to have some unnoticed data corruption somewhere. And in 5-7 years you’ll own a cheap 100 TB drive full of data.
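To put rough numbers on that claim, here is a small back-of-the-envelope sketch. It assumes silent errors are independent and arrive at a constant rate of one per 10-20 TB transferred (so a Poisson model applies), and it counts only the 9 TB copied; the function name is made up for illustration.

```python
import math

def silent_error_odds(data_tb, tb_per_error):
    """Return (expected errors, probability of at least one) for a transfer."""
    expected = data_tb / tb_per_error
    p_at_least_one = 1.0 - math.exp(-expected)  # Poisson: P(N >= 1)
    return expected, p_at_least_one

copied_tb = 3 * 3  # a 3 TB drive copied three times
for tb_per_error in (10, 20):
    expected, p = silent_error_odds(copied_tb, tb_per_error)
    print(f"1 error per {tb_per_error} TB: expected {expected:.2f}, "
          f"P(at least one) = {p:.0%}")
```

Under these assumptions the chance of at least one silent error comes out at roughly 36% to 59%, depending on which end of the 10-20 TB range you believe.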

Most of today’s file systems were designed somewhere in the 1980s or early 1990s at best, when we stored our data on 1.44MB floppies and had no idea what a terabyte is. They continue to work, patched beyond recognition, but they were not really designed for today’s, let alone tomorrow’s disk sizes and payloads.

Enter ZFS

ZFS is hands down the most advanced file system in the world. Add to that some other superlatives: the most future proof, enterprise-grade and totally open-source. Its features put any other FS to shame:

- it includes a built-in volume manager (no more partitions, but storage pools),

- ensures data integrity by checksumming every block of data, not just metadata,

- automatically corrects data (for this, it needs 2 copies of it, which is why you need a mirror or a copies=N setting with N>1),

- compresses data (with up to gzip-9), which is extremely useful for archival purposes and can also speed up reads, since less data has to come off the disk,

- supports on-the-fly deduplication (more info here),

- has efficient and fast snapshotting,

- can send filesystems or their deltas to another ZFS or to a file, and re-apply them back,

- can seamlessly use a hybrid storage model (cache the most used data in RAM, and slightly less used data on an SSD), which means it’s blazingly fast,

- integrates iSCSI, SMB (in the FS itself), supports quotas, and more.
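The checksumming and self-healing items are easier to picture with a toy model. The sketch below is not how ZFS is implemented; it is a simplified illustration, with a made-up `ToyMirror` class, of the idea that a checksum stored apart from the data lets a read detect a bad copy and repair it from a good one.

```python
import hashlib

def checksum(block):
    return hashlib.sha256(block).hexdigest()

class ToyMirror:
    """Two copies of every block, plus a checksum kept separately from the data."""

    def __init__(self):
        self.sides = ({}, {})   # the two "disks" of the mirror
        self.sums = {}          # block id -> expected checksum

    def write(self, block_id, data):
        for side in self.sides:
            side[block_id] = data
        self.sums[block_id] = checksum(data)

    def read(self, block_id):
        expected = self.sums[block_id]
        for side in self.sides:
            data = side[block_id]
            if checksum(data) == expected:
                # Good copy found: overwrite any copy that fails its checksum.
                for other in self.sides:
                    if checksum(other[block_id]) != expected:
                        other[block_id] = data
                return data
        raise IOError(f"block {block_id}: no copy matches its checksum")

mirror = ToyMirror()
mirror.write(0, b"family photos")
mirror.sides[0][0] = b"family qhotos"   # simulate silent corruption on one disk
print(mirror.read(0))                    # served from the intact copy
print(mirror.sides[0][0])                # the damaged copy has been repaired
```

With only one copy, the same checksum would still detect the corruption, but there would be nothing to repair it from.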

Of course, ZFS will use as much RAM as it can get for cache, plus about 1 GB per 1 TB of data for storing deduplication hashes. And since the integrity of data is ensured on the drive, it would be a shame for it to get corrupted in RAM (hence, ECC RAM is a must).

The setup

Getting all this packed inside a single box looked like an impossible goal, until I found the HP ProLiant MicroServer. You can check the review that finally convinced me below.

The specs are not stellar, but it provides quite a bang for the buck.

- It’s a nice and small tower of 27 x 26 x 21 cm with 4 externally accessible drive bays and ECC RAM support;

- CPU is arguably its weakest point: Dual-core AMD N36L (2x 1.3 GHz); however, the obvious advantage of AMD over Atoms is ECC support;

- It includes a 250 GB drive and 1 GB of RAM, but I’ve upgraded that.

- upgrade 1: 2x 4 GB of ECC RAM; as I said, ECC is a must for a server, where a bit flip in memory can wreak havoc in a file system that is basically a sky-high stack of compressed and deduplicated snapshots.

- upgrade 2: 2x 2 TB WD Green; these drives are energy efficient and can be reprogrammed to avoid aggressive spin-downs.

- All together, the server loaded with 3 drives consumes only 45W. It’s not silent, but it’s pretty quiet.

Here’s a quick rundown of what I’ve done with it, mostly following this excellent tutorial (but avoiding the potentially dangerous 4k sector optimization):

- I installed Nexenta Core, which is a distro combining a Solaris kernel with an Ubuntu userland. I’ve read many good things about it and find it more intuitive and lean than Solaris.

- Note: as Nexenta currently doesn’t support booting from a USB key, I had to use an external CD drive, which I hacked from a normal CD drive and an IDE-to-USB cable.

- I reconfigured WD Green HDDs to disable frequent spindowns.

- But: I avoided fiddling with the zpool binary from the tutorial above because it can cause compatibility issues and data loss and brings little improvement.

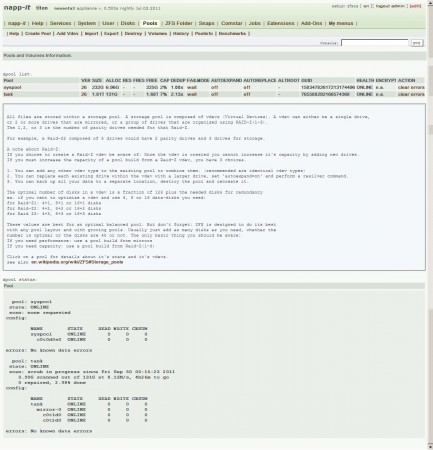

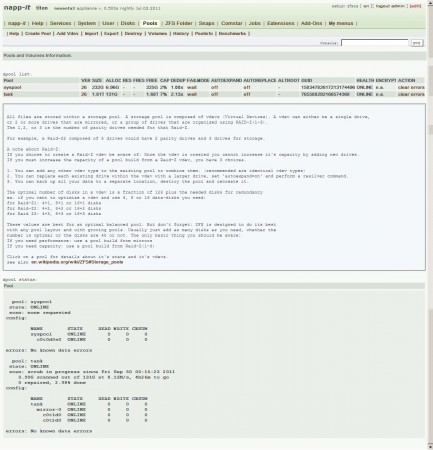

- I finally added the excellent napp-it web GUI for basic web management. This comes pretty close to a home NAS appliance with the geek factor turned up to 11. You can monitor and control pretty much everything you wish (see below for a screenshot).

Napp-it web GUI -- pool statistics

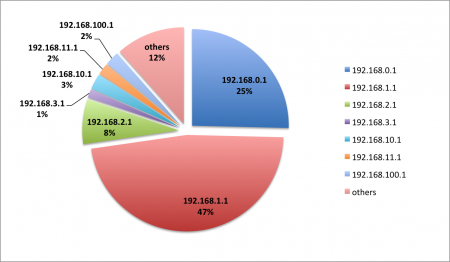

I configured two drives as a mirrored pool and created a bunch of filesystems in it. A ZFS filesystem is quite analogous to a regular directory and you can have as many filesystems (even nested ones) as you wish. Each separate ZFS can have individual compression, deduplication, sharing and mount point settings (however, deduplication itself is pool-wide).
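As a rough sketch of what such a layout looks like on the command line, the snippet below only builds and prints the `zpool create` and `zfs create -o property=value` invocations; it does not run them. The disk names (`c1t0d0`, `c1t1d0`), the filesystem names and the chosen property values are hypothetical, not the ones used on this server.

```python
import shlex

def mirror_pool_commands(pool, disks, filesystems):
    """Return the zpool/zfs command lines for a mirrored pool and its filesystems."""
    commands = [["zpool", "create", pool, "mirror", *disks]]
    for name, props in filesystems.items():
        cmd = ["zfs", "create"]
        for key, value in props.items():
            cmd += ["-o", f"{key}={value}"]   # per-filesystem property
        cmd.append(f"{pool}/{name}")
        commands.append(cmd)
    return commands

# Hypothetical disk and filesystem names; adjust to your own system.
plan = mirror_pool_commands(
    "tank",
    ["c1t0d0", "c1t1d0"],
    {
        "backup": {"compression": "gzip-9", "dedup": "on"},
        "media": {"compression": "off", "sharesmb": "on"},
    },
)
for cmd in plan:
    print(shlex.join(cmd))  # print only; nothing is executed
```

Printing the plan first makes it easy to review each filesystem's settings before anything touches the disks.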

To give a feel for deduplication and compression at work: of the roughly 630 GB of data currently on it (about 500 GB of which are VMware backup images of three servers), the actual space occupied is 131 GB.

    urban@titan:~$ zpool list
    NAME      SIZE   ALLOC  FREE   CAP  DEDUP  HEALTH  ALTROOT
    syspool   232G   6.96G  225G   2%   1.00x  ONLINE  -
    tank      1.81T  131G   1.68T  7%   2.13x  ONLINE  -

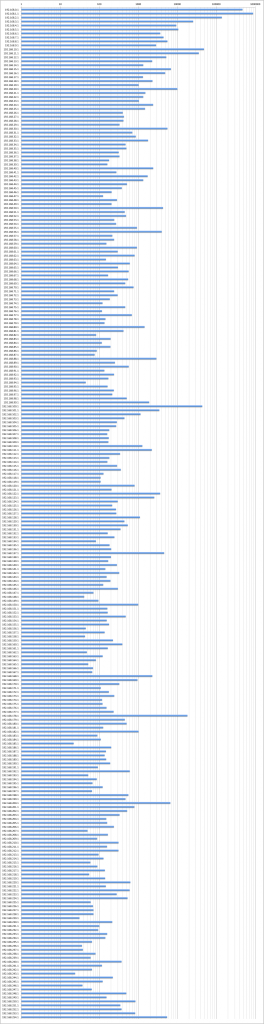

If we look at the ZFS debugger (zdb) stats about compression and deduplication, we see there’s a lot of both going on:

    urban@titan:~$ sudo zdb -D tank
    DDT-sha256-zap-duplicate: 695216 entries, size 304 on disk, 161 in core
    DDT-sha256-zap-unique: 1122849 entries, size 337 on disk, 208 in core
    dedup = 2.13, compress = 2.04, copies = 1.00, dedup * compress / copies = 4.35
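These numbers also give a rough idea of how much RAM the deduplication table needs. The sketch below parses the output above and multiplies the entry counts by the "in core" sizes, assuming those sizes are bytes per entry (the usual reading of this output); it also recomputes the combined ratio. The helper names are made up for illustration.

```python
import re

ZDB_OUTPUT = """\
DDT-sha256-zap-duplicate: 695216 entries, size 304 on disk, 161 in core
DDT-sha256-zap-unique: 1122849 entries, size 337 on disk, 208 in core
dedup = 2.13, compress = 2.04, copies = 1.00, dedup * compress / copies = 4.35
"""

DDT_LINE = re.compile(r"(\d+) entries, size (\d+) on disk, (\d+) in core")

def ddt_in_core_bytes(text):
    """Sum entries * in-core size over all DDT lines (assumed bytes per entry)."""
    return sum(int(n) * int(core) for n, _disk, core in DDT_LINE.findall(text))

def combined_ratio(text):
    """Recompute dedup * compress / copies from the summary line."""
    m = re.search(r"dedup = ([\d.]+), compress = ([\d.]+), copies = ([\d.]+)", text)
    dedup, compress, copies = map(float, m.groups())
    return dedup * compress / copies

ram = ddt_in_core_bytes(ZDB_OUTPUT)
print(f"DDT in core: about {ram / 2**20:.0f} MiB")
print(f"combined ratio: {combined_ratio(ZDB_OUTPUT):.2f}")
```

By this estimate the table takes roughly 330 MiB, which fits comfortably in 8 GB of RAM.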

From here on

So far I’ve been more than satisfied. I’ve written about deduplication before, and this here is by far the most elegant and robust solution. Of course there’s a bunch of stuff to do next.

The first one is virtualization, and here my only option (this being a Solaris kernel) is VirtualBox. Until now I’ve sworn by VMware, but image conversion is actually pretty straightforward (using the qemu-img tool).
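For reference, a conversion of this kind typically uses `qemu-img convert` with the `vmdk` input format and the `vdi` output format. The sketch below only builds and prints such a command; the image path is hypothetical and the helper function is made up for illustration.

```python
import shlex
from pathlib import PurePosixPath

def vmdk_to_vdi_command(source):
    """Build a qemu-img command that converts a VMware disk to a VirtualBox VDI."""
    target = PurePosixPath(source).with_suffix(".vdi")
    return ["qemu-img", "convert", "-f", "vmdk", "-O", "vdi", str(source), str(target)]

# Hypothetical image path, for illustration only.
print(shlex.join(vmdk_to_vdi_command("/tank/vm/eee.vmdk")))
```

Converting to a new file leaves the original VMware image untouched, so it stays available as a fallback.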

The first candidate for virtualization will be my old EEE, because I still need Windows for running a couple of Windows-only services. The virtual EEE should also be able to mount the ZFS filesystems underneath, either via SMB or iSCSI (Microsoft does provide a free iSCSI initiator, which I’ve successfully used before), which should ensure a smooth transition to the new server.
