A little home server that could

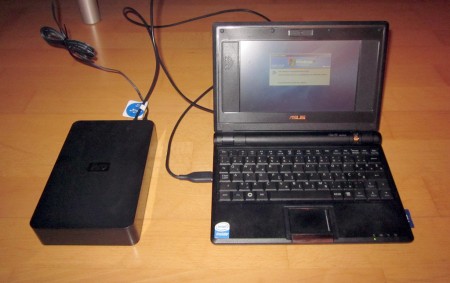

My home server is an old EEE 701 netbook with a 2TB WD Elements drive attached. Three factors guided the selection: I wanted a system that was small, silent and low on power consumption.

I was considering many alternatives, among them a PC Engines Alix machine (500MHz Geode + 256MB RAM) with only 3-5 watts of power consumption, but eventually I decided on a 7″ EEE, for the following reasons:

- integrated UPS (laptop battery with >2hrs of life; saved me more than once)

- integrated monitor and keyboard (priceless when things get dirty)

- expandable RAM (I installed 1GB)

- low power consumption (with disabled wifi, screen and camera) at 7-9W

- slightly faster CPU than Alix (EEE is underclocked from 900 to 600MHz, which should extend battery life. I played with overclocking, but concluded it was not worth the fear of an occasional unexpected freeze)

- much lower power consumption than modern-day quad cores, but just as much CPU power as high-end PCs 10 years ago (much more is not needed for a file server anyway)

- small form factor

- cheap (can obtain a second-hand replacement for a small price, should one be needed)

- had one lying around anyway, collecting dust.

It runs an nLite-trimmed Windows XP. I gave this a lot of thought, weighing XP against Ubuntu and the original Xandros. I still have two of them installed (on separate media: the internal SSD and an SD card), but eventually XP won for the following reasons:

- On-the-fly NTFS compression. It reduces used storage on the 4GB SSD system disk from 3.9GB to 2.8GB and my data from 1500GB to 1200GB; in other words, with more than 1GB of junk installed in Program Files, the 4GB internal drive is still only 65% full. NTFS also supports shadow copies of open files and hard links for rsync link-farm backups.

- Some Windows-only services and utilities I use (EyeFi server, Fitbit uploader, Total Commander, WinDirStat, etc.).

- Actually *working* remote desktop (as compared to various poor-performing VNC-based solutions).

- nLite-trimmed XP (with most of the unnecessary services completely removed) has a memory footprint of <100 MB and easily clocks 6 months of uptime.
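The "link farm" idea mentioned in the NTFS point can be illustrated with a minimal Python sketch. This is not the setup used on this server (that one relies on rsync); it only shows the principle, similar in spirit to rsync's `--link-dest` option: every snapshot looks like a full copy, but files that did not change are hard links to the previous snapshot and take no extra space. The function names and the "same size and same modification time means unchanged" rule are assumptions made for the example.

```python
import os
import shutil
from pathlib import Path
from typing import Optional


def _unchanged(a: Path, b: Path) -> bool:
    # Assumption for this sketch: same size and same mtime (to the second)
    # means the file content did not change.
    sa, sb = a.stat(), b.stat()
    return sa.st_size == sb.st_size and int(sa.st_mtime) == int(sb.st_mtime)


def snapshot(source: Path, previous: Optional[Path], target: Path) -> None:
    """Create `target` as a full-looking copy of `source`.

    Files identical to their counterpart in `previous` are hard-linked
    instead of copied, so they occupy no additional disk space.
    """
    for root, _dirs, files in os.walk(source):
        rel = Path(root).relative_to(source)
        (target / rel).mkdir(parents=True, exist_ok=True)
        for name in files:
            src = Path(root) / name
            dst = target / rel / name
            old = previous / rel / name if previous is not None else None
            if old is not None and old.is_file() and _unchanged(src, old):
                os.link(old, dst)       # hard link; works on NTFS as well
            else:
                shutil.copy2(src, dst)  # real copy, preserves mtime
```

Calling `snapshot(src, None, first)` makes a plain copy; later calls pass the latest snapshot as `previous`. Deleting an old snapshot is safe, because a hard-linked file stays on disk until its last link is removed.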

I do occasionally regret my choice, but for now familiarity and convenience still trump unrealized possibilities. The upcoming Btrfs, however, might be just enough to tip the scale in favor of Linux.

What I run on it

- Filezilla server for remote FTP access (I use FTP-SSL only)

- Rsync server (Deltacopy) to efficiently sync stuff from remote locations

- WinSSHD to scp stuff from remote locations

- Remote desktop server for local access

- Windows/Samba shares for easy local file access

- Firefly for exposing MP3 library to PC/Mac computers running iTunes (v1586 works best, later versions seem to hang randomly)

- A cloud backup service to push the most important stuff to the cloud

- EyeFi server, so photos automatically sync from camera to the server

- “Time Machine server”, which is nothing but a .sparsebundle on a network share, allowing me to back up my Mac machines (tutorial)

- Gmailbackup, which executes as a scheduled task.
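With this many services on one small box, a quick check of what is actually listening can be handy after a reboot. The sketch below is an illustration only: the port numbers are the conventional defaults for these protocols, not necessarily the ones configured on this server, and any hostname passed in is up to the reader.

```python
import socket

# Conventional default ports (assumption: the real setup may use others).
DEFAULT_PORTS = {
    "FTP (control)": 21,
    "SSH/scp": 22,
    "SMB shares": 445,
    "rsync daemon": 873,
    "Remote Desktop": 3389,
    "DAAP (Firefly)": 3689,
}


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def report(host: str, ports: dict = DEFAULT_PORTS) -> dict:
    """Map each service name to whether its port accepts connections."""
    return {name: is_listening(host, port) for name, port in ports.items()}
```

A successful TCP connect only shows that something is listening on the port; it does not prove that the service behind it is healthy.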

Some other random considerations and notes

- Disable the swap file when using an SSD (especially such a small one).

- No more partitions for large drives: I just “split” the 2TB drive into directories. The times of partitioning HDDs are long gone, and partitions brought nothing but pain whenever a small one was getting full and needed resizing. Think of directories as “dynamic pools”.

- No WiFi means no reliability issues, more bandwidth, more free spectrum for other users and more security.

- WD Elements runs 10ºC cooler than WD Mybook when laid horizontally and is also cheaper.

- Use Truecrypt containers to mount sensitive stuff. When the machine is rebooted, they are unmounted and useless until the password is entered again.

- Use PKI auth instead of keyboard-interactive authentication for publicly open remote connections.

- Use a firewall to allow only connections from trusted sources.
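The "trusted sources only" rule from the last point boils down to an allowlist check. The firewall itself does this on the real machine; the sketch below only illustrates the logic, using Python's standard `ipaddress` module. Both networks are made-up placeholders (a typical home LAN range and an address from the documentation range), not the addresses actually used.

```python
import ipaddress

# Hypothetical allowlist: one home LAN and one single remote host.
TRUSTED_NETWORKS = [
    ipaddress.ip_network("192.168.1.0/24"),   # placeholder home LAN
    ipaddress.ip_network("203.0.113.17/32"),  # placeholder remote address
]


def is_trusted(addr: str, networks=TRUSTED_NETWORKS) -> bool:
    """Return True if `addr` falls inside any of the trusted networks."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in networks)
```

Anything not explicitly listed is rejected, which is the safer default for services exposed to the internet.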

In any case, this is just a file/media/backup server and I’ve been quite satisfied with it so far (I’ve been using it for over a year). However, all my web servers are virtual, hosted elsewhere and regularly rsynced to this one.

dare 19:30 on 12 May. 2011 Permalink

I’m impressed. Especially that you have the time to measure the disk temperature in different positions 🙂

Seriously, you’ve convinced me. You say used ones can be had for 100 EUR?

Hey, how about cross-posting this on Obrlizg? I think it’s really nice geeky stuff.

wujetz 08:58 on 13 May. 2011 Permalink

Convinced me too…

Just one question: is the thing powerful enough to also host a game server (BF, CS, …)?

Urban 21:58 on 13 May. 2011 Permalink

@dare: I discovered the temperature thing by accident, because every now and then I run the excellent and free CrystalDiskInfo (it tells you when errors start piling up in SMART, which means the disk is about to die). There it said 60ºC in big red letters, which is not good; the WD Elements doesn’t go above 45ºC.

For used ones, check Bolha; right now someone is selling one for 99 EUR, even with 2GB of RAM.

@wujetz: I have no idea how much CPU that eats; the stock frequency is underclocked to 630MHz, the normal one is around 900MHz, and you can even crank it up to 1GHz (you simply pick the speed from the menu of the excellent utility http://www.cpp.in/dev/eeectl/ ).

A gigahertz is already a solid speed, but for that you need somewhat better cooling (at least the fan on max).

For more info, check the forum of die-hard users: http://forum.eeeuser.com/viewforum.php?id=3